Distributed Denial of Service (DDoS) attacks flood servers with excessive traffic, disrupting service for legitimate users. Rate limiting is a crucial method of defending against them: by capping how many requests each client can make, a server can reject or slow malicious traffic while keeping access open for genuine users.

What Is Rate Limiting for DDoS Attacks?

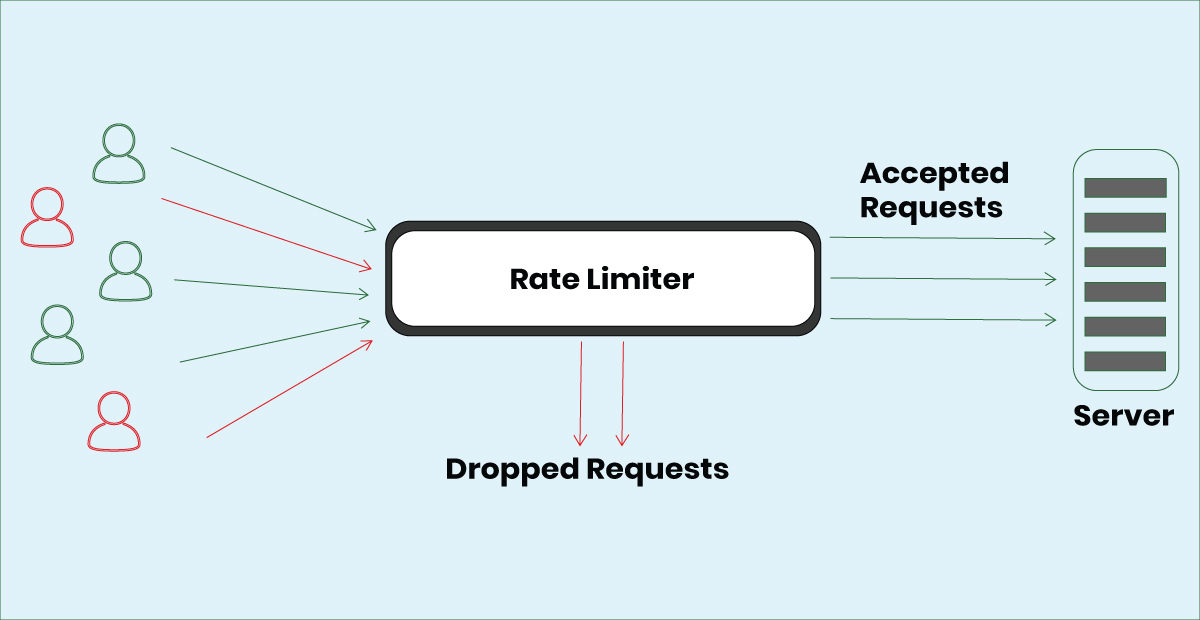

Rate limiting is a defense mechanism that controls how much traffic a server accepts. In the context of DDoS attacks, it helps prevent the server from being overloaded by restricting the number of requests a user can make within a specific timeframe.

How Rate Limiting Works

Rate limiting operates by tracking users' requests, typically based on their IP addresses, and comparing the number of requests made against predefined thresholds. If a user exceeds this limit, their requests may be temporarily blocked or delayed. This mechanism prevents malicious actors from overwhelming the server with excessive traffic, which is characteristic of DDoS attacks.

For example, consider an online retail website hosted on a shared hosting plan that allows each IP address to make a maximum of 10 requests per minute. A legitimate user browsing the site might make 5 requests in one minute and have all of them processed successfully. However, if an attacker tries to send 50 requests in the same timeframe, the server processes the first 10 and blocks the remaining 40.

This prevents the attacker from overloading the server while allowing real users to access the site, even during busy times. By controlling the number of requests that can be made, rate limiting helps keep the system stable and ensures that all users have fair access to resources.
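The retail-site scenario above can be checked with a few lines of Python. This is only a sketch of the counting logic for a single one-minute period; the function name `simulate_minute` and the two IP addresses (taken from the reserved documentation ranges) are assumptions made for illustration.

```python
from collections import Counter

LIMIT_PER_MINUTE = 10  # threshold from the example above


def simulate_minute(requests_by_ip, limit=LIMIT_PER_MINUTE):
    """Return {ip: (allowed, blocked)} for requests arriving within one minute."""
    seen = Counter()
    results = {}
    for ip, count in requests_by_ip.items():
        allowed = blocked = 0
        for _ in range(count):
            seen[ip] += 1
            if seen[ip] <= limit:
                allowed += 1   # still within the per-IP quota
            else:
                blocked += 1   # quota exhausted for this minute
        results[ip] = (allowed, blocked)
    return results


# A legitimate user sends 5 requests, an attacker sends 50.
outcome = simulate_minute({"203.0.113.5": 5, "198.51.100.7": 50})
print(outcome)
```

The legitimate user's 5 requests all pass, while the attacker gets 10 through and has 40 blocked.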

Types of Algorithms for Rate Limiting

1. Fixed-Window Rate Limiting:

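This algorithm counts the number of requests within a fixed time period (e.g., one minute). Once the limit is reached, further requests are denied until the next time window begins. It is simple to implement, but it has two weaknesses: a legitimate user who hits the limit during a traffic spike is locked out until the window resets, and a client can send a full quota at the end of one window and another at the start of the next, briefly doubling the allowed rate.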
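A minimal fixed-window sketch might look like the following. The class name `FixedWindowLimiter` and the explicit `now` argument (seconds, passed in so the example is deterministic) are assumptions for illustration; a production version would also expire counters for old windows. The usage lines show what happens when requests straddle a window boundary.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Count requests per client in fixed, non-overlapping time windows."""

    def __init__(self, limit, window_seconds):
        self.limit = limit
        self.window = window_seconds
        self.counts = defaultdict(int)  # (client, window index) -> request count

    def allow(self, client, now):
        window_index = int(now // self.window)  # which window `now` falls into
        key = (client, window_index)
        if self.counts[key] >= self.limit:
            return False  # limit reached; denied until the next window starts
        self.counts[key] += 1
        return True


limiter = FixedWindowLimiter(limit=10, window_seconds=60)

# 10 requests just before the boundary at t=60s, 10 more just after it.
before = [limiter.allow("198.51.100.7", 59.0) for _ in range(10)]
after = [limiter.allow("198.51.100.7", 61.0) for _ in range(10)]
accepted_in_two_seconds = sum(before) + sum(after)

# An 11th request inside the second window is denied.
eleventh = limiter.allow("198.51.100.7", 61.5)
```

Here all 20 requests are accepted within about two seconds even though the limit is 10 per minute, because the counter resets at the boundary.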

2. Sliding-Window Rate Limiting:

This method counts requests over a moving time window that always covers the most recent interval (e.g., the last 60 seconds) instead of resetting at fixed boundaries. This avoids the burst that fixed windows permit around a reset and follows fluctuating usage more accurately, at the cost of keeping more state per client. However, it may still struggle against sustained high-volume attacks.
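One common way to implement a sliding window is to keep a log of recent request timestamps per client. The sketch below takes that approach; the class name `SlidingWindowLimiter` and the explicit `now` argument are assumptions for illustration, and other variants (such as weighted counters) exist.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client in any trailing window."""

    def __init__(self, limit, window_seconds):
        self.limit = limit
        self.window = window_seconds
        self.log = defaultdict(deque)  # client -> timestamps of accepted requests

    def allow(self, client, now):
        timestamps = self.log[client]
        # Discard timestamps that have slid out of the trailing window.
        while timestamps and timestamps[0] <= now - self.window:
            timestamps.popleft()
        if len(timestamps) >= self.limit:
            return False
        timestamps.append(now)
        return True


limiter = SlidingWindowLimiter(limit=10, window_seconds=60)

first = [limiter.allow("198.51.100.7", 59.0) for _ in range(10)]   # fills the quota
second = [limiter.allow("198.51.100.7", 61.0) for _ in range(10)]  # still inside the last 60 s
later = limiter.allow("198.51.100.7", 120.0)                       # earlier requests have expired
```

The second batch is rejected in full because the first 10 requests are still inside the trailing 60 seconds; a request at t=120s is accepted again.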

3. Token Bucket Rate Limiting:

In this algorithm, a "bucket" is filled with tokens at a set rate, up to a maximum capacity. Each request consumes a token; if the bucket is empty, additional requests are denied. This allows short bursts of traffic up to the bucket's capacity while capping the long-run request rate at the refill rate. It is effective for absorbing sudden spikes, but under prolonged high traffic the bucket stays drained and requests are admitted only as fast as tokens are refilled.
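The token bucket can be sketched with lazy refilling, where tokens are topped up whenever a request arrives rather than by a background timer. The class name `TokenBucket`, the capacity of 5, and the refill rate of 1 token per second are hypothetical values chosen to keep the arithmetic easy to follow.

```python
class TokenBucket:
    """Token bucket with lazy refill; `now` is passed in to keep the example deterministic."""

    def __init__(self, capacity, refill_rate, now=0.0):
        self.capacity = capacity        # maximum burst size
        self.refill_rate = refill_rate  # tokens added per second
        self.tokens = float(capacity)   # start with a full bucket
        self.last = now

    def allow(self, now):
        # Add the tokens earned since the last call, capped at capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1  # each accepted request consumes one token
            return True
        return False


bucket = TokenBucket(capacity=5, refill_rate=1.0)

burst = [bucket.allow(0.0) for _ in range(8)]      # 8 requests at once
two_s_later = [bucket.allow(2.0) for _ in range(3)]  # 2 tokens have been refilled
```

The burst of 8 gets 5 requests through and 3 denied; two seconds later, exactly 2 of the next 3 requests are accepted.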

4. Leaky Bucket Rate Limiting:

Unlike the token bucket, which permits bursts, this algorithm processes requests at a constant rate regardless of how quickly they arrive. Excess requests are queued until they can be processed at the defined rate, and requests that arrive while the queue is full are dropped. This smooths out traffic spikes and maintains consistent service levels.
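To see the smoothing effect, the sketch below simulates a leaky bucket one second at a time. The function name `leaky_bucket_simulation`, the bounded queue size, and the sample numbers are assumptions for illustration; it models the queue-based variant in which requests that find the queue full are dropped.

```python
from collections import deque


def leaky_bucket_simulation(arrivals_per_second, capacity, leak_per_second):
    """Simulate a leaky bucket second by second.

    arrivals_per_second: number of requests arriving in each second.
    Returns (processed_per_second, dropped_total).
    """
    queue = deque()
    processed = []
    dropped = 0
    for second, arrivals in enumerate(arrivals_per_second):
        for i in range(arrivals):
            if len(queue) < capacity:
                queue.append((second, i))  # wait in the bucket
            else:
                dropped += 1               # bucket full: overflow is discarded
        released = 0
        while queue and released < leak_per_second:
            queue.popleft()                # process at the constant leak rate
            released += 1
        processed.append(released)
    return processed, dropped


# A spike of 12 requests in the first second, then silence.
processed, dropped = leaky_bucket_simulation(
    [12, 0, 0, 0], capacity=6, leak_per_second=2
)
print(processed, dropped)
```

The spike is turned into a steady 2 requests per second for three seconds, and the 6 requests that did not fit in the queue are dropped.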

Types of Rate Limiting

1. User-Based Rate Limiting:

This method restricts access based on a specific user, often identified by their IP address, API key, or other unique identifiers (like user credentials). It is particularly useful for preventing attacks like credential stuffing (where attackers try to log in with stolen credentials) and DDoS attacks.

2. Geographic Rate Limiting:

This type of rate limiting restricts the number of requests originating from specific geographic regions or locations. It can be particularly helpful in mitigating attacks that come from certain countries or regions where traffic spikes could indicate a DDoS or other malicious activity.

3. Time-Based Rate Limiting:

This method controls the frequency of requests by using timestamps. For example, if a user makes too many requests within a short time window, the server blocks or delays further requests.
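In practice, the user-based and geographic variants differ mainly in which key the counter is attached to and which limit applies to it. The sketch below illustrates that choice only; the `Request` type, the limits, and the placeholder region code "ZZ" are all hypothetical, and the region is assumed to come from a GeoIP lookup that is not shown.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical limits (requests per minute), chosen only for illustration.
DEFAULT_LIMIT = 60
REGION_LIMITS = {"ZZ": 10}  # "ZZ" is a placeholder region code


@dataclass
class Request:
    ip: str
    api_key: Optional[str] = None
    region: Optional[str] = None  # assumed result of a GeoIP lookup (not shown)


def user_key(request: Request) -> str:
    """User-based limiting: prefer the API key, fall back to the IP address."""
    if request.api_key:
        return f"key:{request.api_key}"
    return f"ip:{request.ip}"


def region_key_and_limit(request: Request) -> Tuple[str, int]:
    """Geographic limiting: one shared counter per region, with its own limit."""
    region = request.region or "unknown"
    return f"region:{region}", REGION_LIMITS.get(region, DEFAULT_LIMIT)


anonymous = Request(ip="203.0.113.5", region="ZZ")
partner = Request(ip="203.0.113.5", api_key="abc123")
```

The resulting key and limit can then be fed to whichever counting algorithm is in use.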

Benefits of Rate Limiting

Prevents Server Overload: Overloading the server is the primary goal of most DDoS attacks. Rate limiting counters this by capping the number of requests the server will accept from a single IP or user.

Reduces Impact of Malicious Traffic: DDoS attacks often flood a server with excessive requests. Rate limiting can help reduce the impact by restricting the traffic flow, ensuring that legitimate users can still access the site or service.

Improves Resource Utilization: By controlling traffic volumes, rate limiting ensures that server resources are used more efficiently, preventing them from being wasted on malicious requests.

Detects and Throttles Abnormal Traffic Patterns: Rate limiting allows you to spot abnormal traffic patterns (like a sudden surge in requests) and act quickly to mitigate the attack before it overwhelms the system.

Enhances System Reliability: Protecting systems from excessive requests helps maintain uptime, ensuring that the service stays available to legitimate users during an attack.

Easy to Implement: Rate limiting can be set up at various layers, including the application level, web server, or via a content delivery network (CDN), making it accessible to different systems.

In conclusion, rate limiting acts as a proactive shield against DDoS attacks, maintaining server integrity by filtering excessive traffic. It ensures that resources are allocated efficiently, allowing legitimate users to maintain access while reducing the impact of malicious requests.